Facebook Is Testing AI to Help Keep Groups Healthy

Online conversations can quickly spiral out of control, whether people intend it or not, so Facebook is experimenting with artificial intelligence that can help keep things civil.

Facebook, the world's largest social network, is testing the use of artificial intelligence (AI) to spot quarrels in its many groups so that group administrators can help calm things down.

The announcement appeared in a blog post Wednesday.

Facebook is rolling out a host of new software tools to help the more than 70 million people who run and moderate groups on its platform.

Facebook, which has 2.85 billion monthly users, said late last year that more than 1.8 billion people participate in groups every month and that there are tens of millions of active groups on the social network.

Along with the addition of Facebook's AI,

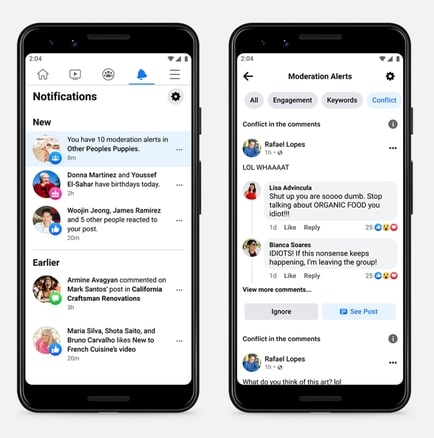

Ai will decide when to send what companies call “conflict warnings” to those who manage and own groups.

An alert will be sent to the group administrator if the AI finds that the conversation in the group they manage or have

“Arguing unhealthily,” the company said.

Over the years, technology platforms like Facebook and Twitter have increasingly relied on AI to determine much of what you see online, from the tools that detect and remove hate speech on Facebook (FB) to the tweets that Twitter (TWTR) surfaces on your timeline.

This can be helpful in filtering out content users don't want to see, and AI can help human moderators clean up social networks that have grown too big for people to monitor on their own.

But AI can also fail to grasp subtlety and context in online posts. The way AI-based moderation systems work can also seem mysterious and hurtful to users.

A Facebook spokesman said the company's AI will use several signals from conversations to determine when to send a conflict alert, including comment reply times and the volume of comments on a post.

He said some administrators already set up keyword alerts that can flag topics likely to lead to arguments as well.
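To make those signals concrete, here is a minimal Python sketch of how reply speed, comment volume, and keyword matches might be combined into an alert decision. It is not Facebook's system: the names `Comment`, `conflict_signals`, and `should_alert`, the 10-minute window, and the thresholds are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Comment:
    author: str
    text: str
    timestamp: float  # seconds since the post was published


def conflict_signals(comments, keywords, window=600.0):
    """Compute simple signals for one post's comment thread (illustrative only)."""
    ordered = sorted(comments, key=lambda c: c.timestamp)
    # Time between consecutive comments: a rough stand-in for "reply time".
    gaps = [b.timestamp - a.timestamp for a, b in zip(ordered, ordered[1:])]
    latest = ordered[-1].timestamp if ordered else 0.0
    # Number of comments in the most recent window: a stand-in for "volume".
    recent = [c for c in ordered if latest - c.timestamp <= window]
    # Admin-defined keywords found anywhere in the thread (case-insensitive).
    hits = sorted({k for c in ordered for k in keywords if k.lower() in c.text.lower()})
    return {
        "median_reply_gap": median(gaps) if gaps else None,
        "recent_volume": len(recent),
        "keyword_hits": hits,
    }


def should_alert(signals, max_gap=30.0, min_volume=5):
    """Alert on a fast, busy thread or on any keyword hit (assumed thresholds)."""
    gap = signals["median_reply_gap"]
    fast = gap is not None and gap <= max_gap
    busy = signals["recent_volume"] >= min_volume
    return (fast and busy) or bool(signals["keyword_hits"])
```

A real system would presumably weigh many more signals and learn its thresholds from data; the fixed cutoffs here only show how the signals named in the article could feed one decision.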

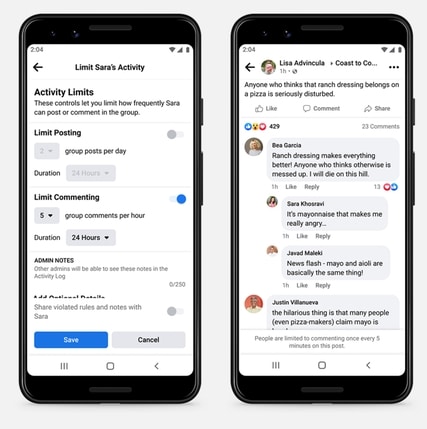

If an administrator receives a conflict alert, they can take actions that Facebook says are aimed at slowing the conversation down, presumably in hopes of calming users.

These measures may include temporarily limiting how often some group members can post comments and setting how quickly comments can be made on individual posts.

Screenshots of a mock argument that Facebook used to show how this could work depict a conversation going off the rails in a group called “Someone Else's Puppy,” where one user responds to another person's post by writing, “Shut up you're so stupid. Stop talking about stupid basic ORGANIC FOOD!!!”

“IDIOT!” responds another user in the example. “If this nonsense keeps happening, I'll leave the group!”

The conversation appears on a screen with the words “Moderation Warning” at the top, beneath which a few words appear in black type inside gray bubbles. All the way to the right, the word “Conflict” appears in blue, inside a blue bubble.

Another set of screen images illustrates how an administrator might respond to a heated conversation (not about politics, vaccinations, or culture wars, but about the merits of ranch dressing and mayonnaise) by limiting a member to posting, say, five comments on group posts for the next day.
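A limit like "five comments for the next day" can be pictured as a sliding-window rate limiter. The Python sketch below is an illustration under assumptions, not Facebook's implementation: the class name `CommentLimiter`, its parameters, and the choice of a rolling 24-hour window are all hypothetical.

```python
from collections import defaultdict, deque


class CommentLimiter:
    """Allow each member at most `max_comments` per rolling `period` seconds."""

    def __init__(self, max_comments=5, period=86400.0):
        self.max_comments = max_comments
        self.period = period
        # Per-member timestamps of accepted comments, oldest first.
        self._history = defaultdict(deque)

    def allow(self, member, now):
        """Return True and record the comment if `member` is under the limit."""
        recent = self._history[member]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.period:
            recent.popleft()
        if len(recent) >= self.max_comments:
            return False
        recent.append(now)
        return True
```

Passing the current time in explicitly keeps the sketch easy to test; a caller would typically supply `time.time()`. Members who are not being limited simply would not be routed through the limiter.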

Source: CNN.com